Chi-square distribution

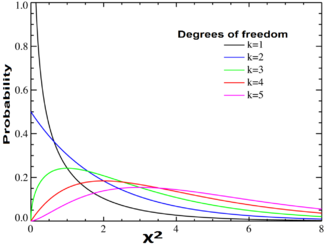

Probability density function |

|

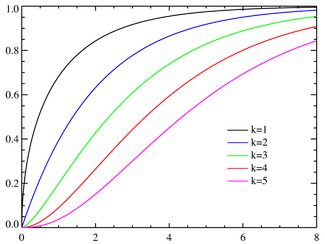

Cumulative distribution function |

|

| notation: |  or or  |

|---|---|

| parameters: | k ∈ N1 — degrees of freedom |

| support: | x ∈ [0, +∞) |

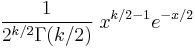

| pdf: |  |

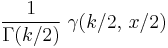

| cdf: |  |

| mean: | k |

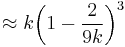

| median: |  |

| mode: | max{ k − 2, 0 } |

| variance: | 2k |

| skewness: |  |

| ex.kurtosis: | 12 / k |

| entropy: |  |

| mgf: |  for t < ½ for t < ½ |

| cf: |  [1] [1] |

In probability theory and statistics, the chi-square distribution (also chi-squared or χ²-distribution) with k degrees of freedom is the distribution of a sum of the squares of k independent standard normal random variables. It is one of the most widely used probability distributions in inferential statistics, e.g. in hypothesis testing, or in construction of confidence intervals.[2][3][4][5]

The best-known situations in which the chi-square distribution is used are the common chi-square tests for goodness of fit of an observed distribution to a theoretical one, and of the independence of two criteria of classification of qualitative data. Many other statistical tests also lead to a use of this distribution, like Friedman's analysis of variance by ranks.

The chi-square distribution is a special case of the gamma distribution.

Contents |

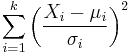

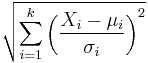

Definition

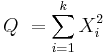

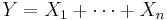

If X1, …, Xk are independent, standard normal random variables, then the sum of their squares

is distributed according to the chi-square distribution with k degrees of freedom. This is usually denoted as

The chi-square distribution has one parameter: k — a positive integer that specifies the number of degrees of freedom (i.e. the number of Xi’s)

Characteristics

Further properties of the chi-square distribution can be found in the box at right.

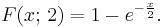

Probability density function

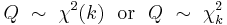

The probability density function (pdf) of the chi-square distribution is

where Γ(k/2) denotes the Gamma function, which has closed-form values at the half-integers.

For derivations of the pdf in the cases of one and two degrees of freedom, see Proofs related to chi-square distribution.

Cumulative distribution function

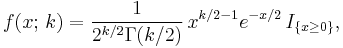

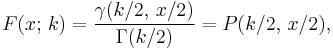

Its cumulative distribution function is:

where γ(k,z) is the lower incomplete Gamma function and P(k,z) is the regularized Gamma function.

In a special case of k = 2 this function has a simple form:

Tables of this distribution — usually in its cumulative form — are widely available and the function is included in many spreadsheets and all statistical packages. For a closed form approximation for the CDF, see under Noncentral chi-square distribution.

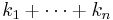

Additivity

It follows from the definition of the chi-square distribution that the sum of independent chi-square variables is also chi-square distributed. Specifically, if  are independent chi-square variables with

are independent chi-square variables with  degrees of freedom, respectively, then

degrees of freedom, respectively, then  is chi-square distributed with

is chi-square distributed with  degrees of freedom.

degrees of freedom.

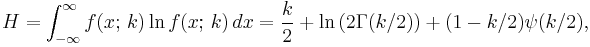

Information entropy

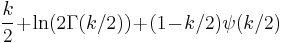

The information entropy is given by

where ψ(x) is the Digamma function.

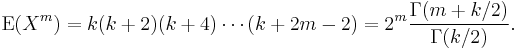

Noncentral moments

The moments about zero of a chi-square distribution with k degrees of freedom are given by[6][7]

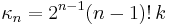

Cumulants

The cumulants are readily obtained by a (formal) power series expansion of the logarithm of the characteristic function:

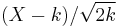

Asymptotic properties

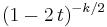

By the central limit theorem, because the chi-square distribution is the sum of k independent random variables, it converges to a normal distribution for large k (k > 50 is “approximately normal”).[8] Specifically, if X ~ χ²(k), then as k tends to infinity, the distribution of  tends to a standard normal distribution. However, convergence is slow as the skewness is

tends to a standard normal distribution. However, convergence is slow as the skewness is  and the excess kurtosis is

and the excess kurtosis is  .

.

Other functions of the chi-square distribution converge more rapidly to a normal distribution. Some examples are:

- If X ~ χ²(k) then

is approximately normally distributed with mean

is approximately normally distributed with mean  and unit variance (result credited to R. A. Fisher).

and unit variance (result credited to R. A. Fisher). - If X ~ χ²(k) then

![\scriptstyle\sqrt[3]{X/k}](/2010-wikipedia_en_wp1-0.8_orig_2010-12/I/6bd5442dd615e41c37b9f37030637ce1.png) is approximately normally distributed with mean

is approximately normally distributed with mean  and variance

and variance  (Wilson and Hilferty, 1931)

(Wilson and Hilferty, 1931)

Related distributions

A chi-square variable with k degrees of freedom is defined as the sum of the squares of k independent standard normal random variables.

If Y is a k-dimensional Gaussian random vector with mean vector μ and rank k covariance matrix C, then X = (Y−μ)TC−1(Y−μ) is chi-square distributed with k degrees of freedom.

The sum of squares of statistically independent unit-variance Gaussian variables which do not have mean zero yields a generalization of the chi-square distribution called the noncentral chi-square distribution.

If Y is a vector of k i.i.d. standard normal random variables and A is a k×k idempotent matrix with rank k−n then the quadratic form YTAY is chi-square distributed with k−n degrees of freedom.

The chi-square distribution is also naturally related to other distributions arising from the Gaussian. In particular,

- Y is F-distributed, Y ∼ F(k1,k2) if

where X1 ~ χ²(k1) and X2 ~ χ²(k2) are statistically independent.

where X1 ~ χ²(k1) and X2 ~ χ²(k2) are statistically independent.

- If X is chi-square distributed, then

is chi distributed.

is chi distributed. - X1 of k1, X2 of k2, and they are independent, then X1+X2 is of k1+k2, if they are not independent, then X1+X2 might not be chi square distributed.

Generalizations

The chi-square distribution is obtained from the sum of k independent, zero-mean, unit-variance Gaussian random variables. Generalizations of this distribution can be obtained by summing the squares of other types of Gaussian random variables. Several such distributions are described below.

Noncentral chi-square distribution

The noncentral chi-square distribution is obtained from the sum of the squares of independent Gaussian random variables having unit variance and nonzero means.

Generalized chi-square distribution

The generalized chi-square distribution is obtained from the quadratic form z′Az where z is a zero-mean Gaussian vector having an arbitrary covariance matrix, and A is an arbitrary matrix.

The chi-square distribution X ~ χ²(k) is a special case of the gamma distribution, in that X ~ Γ(k/2, 2) (using the shape parameterization of the gamma distribution).

Because the exponential distribution is also a special case of the Gamma distribution, we also have that if X ~ χ²(2), then X ~ Exp(1/2) is an exponential distribution.

The Erlang distribution is also a special case of the Gamma distribution and thus we also have that if X ~ χ²(k) with even k, then X is Erlang distributed with shape parameter k/2 and scale parameter 1/2.

Applications

The chi-square distribution has numerous applications in inferential statistics, for instance in chi-square tests and in estimating variances. It enters the problem of estimating the mean of a normally distributed population and the problem of estimating the slope of a regression line via its role in Student’s t-distribution. It enters all analysis of variance problems via its role in the F-distribution, which is the distribution of the ratio of two independent chi-squared random variables divided by their respective degrees of freedom.

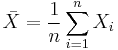

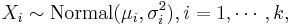

Following are some of the most common situations in which the chi-square distribution arises from a Gaussian-distributed sample.

- if

are i.i.d.

are i.i.d.  random variables, then

random variables, then  where

where  .

.

- The box below shows probability distributions with name starting with chi for some statistics based on

independent random variables:

independent random variables:

| Name | Statistic |

|---|---|

| chi-square distribution |  |

| noncentral chi-square distribution |  |

| chi distribution |  |

| noncentral chi distribution |  |

Table of χ² value vs P value

The P-value is the probability of observing a test statistic at least as extreme in a Chi-square distribution. Accordingly, since the cumulative distribution function (CDF) for the appropriate degrees of freedom (df) gives the probability of having obtained a value less extreme than this point, subtracting the CDF value from 1 gives the P-value. The table below gives a number of P-values matching to χ² for the first 10 degrees of freedom. A P-value of 0.05 or less is usually regarded as statistically significant.

| Degrees of freedom (df) | χ² value [9] | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

|

1

|

0.004 | 0.02 | 0.06 | 0.15 | 0.46 | 1.07 | 1.64 | 2.71 | 3.84 | 6.64 | 10.83 |

|

2

|

0.10 | 0.21 | 0.45 | 0.71 | 1.39 | 2.41 | 3.22 | 4.60 | 5.99 | 9.21 | 13.82 |

|

3

|

0.35 | 0.58 | 1.01 | 1.42 | 2.37 | 3.66 | 4.64 | 6.25 | 7.82 | 11.34 | 16.27 |

|

4

|

0.71 | 1.06 | 1.65 | 2.20 | 3.36 | 4.88 | 5.99 | 7.78 | 9.49 | 13.28 | 18.47 |

|

5

|

1.14 | 1.61 | 2.34 | 3.00 | 4.35 | 6.06 | 7.29 | 9.24 | 11.07 | 15.09 | 20.52 |

|

6

|

1.63 | 2.20 | 3.07 | 3.83 | 5.35 | 7.23 | 8.56 | 10.64 | 12.59 | 16.81 | 22.46 |

|

7

|

2.17 | 2.83 | 3.82 | 4.67 | 6.35 | 8.38 | 9.80 | 12.02 | 14.07 | 18.48 | 24.32 |

|

8

|

2.73 | 3.49 | 4.59 | 5.53 | 7.34 | 9.52 | 11.03 | 13.36 | 15.51 | 20.09 | 26.12 |

|

9

|

3.32 | 4.17 | 5.38 | 6.39 | 8.34 | 10.66 | 12.24 | 14.68 | 16.92 | 21.67 | 27.88 |

|

10

|

3.94 | 4.86 | 6.18 | 7.27 | 9.34 | 11.78 | 13.44 | 15.99 | 18.31 | 23.21 | 29.59 |

|

P value (Probability)

|

0.95 | 0.90 | 0.80 | 0.70 | 0.50 | 0.30 | 0.20 | 0.10 | 0.05 | 0.01 | 0.001 |

| Nonsignificant | Significant | ||||||||||

See also

- Cochran's theorem

- Degrees of freedom (statistics)

- Fisher's method for combining independent tests of significance

- Generalized chi-square distribution

- High-dimensional space

- Inverse-chi-square distribution

- Noncentral chi-square distribution

- Normal distribution

- Pearson's chi-square test

- Proofs related to chi-square distribution

- Wishart distribution

References

- ↑ M.A. Sanders. "Characteristic function of the central chi-square distribution". http://www.planetmathematics.com/CentralChiDistr.pdf. Retrieved 2009-03-06.

- ↑ Abramowitz, Milton; Stegun, Irene A., eds. (1965), "Chapter 26", Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables, New York: Dover, pp. 940, MR0167642, ISBN 978-0486612720, http://www.math.sfu.ca/~cbm/aands/page_940.htm.

- ↑ NIST (2006). Engineering Statistics Handbook - Chi-Square Distribution

- ↑ Jonhson, N.L.; S. Kotz, , N. Balakrishnan (1994). Continuous Univariate Distributions (Second Ed., Vol. 1, Chapter 18). John Willey and Sons. ISBN 0-471-58495-9.

- ↑ Mood, Alexander; Franklin A. Graybill, Duane C. Boes (1974). Introduction to the Theory of Statistics (Third Edition, p. 241-246). McGraw-Hill. ISBN 0-07-042864-6.

- ↑ Chi-square distribution, from MathWorld, retrieved Feb. 11, 2009

- ↑ M. K. Simon, Probability Distributions Involving Gaussian Random Variables, New York: Springer, 2002, eq. (2.35), ISBN 978-0-387-34657-1

- ↑ Box, Hunter and Hunter. Statistics for experimenters. Wiley. p. 46.

- ↑ Chi-Square Test Table B.2. Dr. Jacqueline S. McLaughlin at The Pennsylvania State University. In turn citing: R.A. Fisher and F. Yates, Statistical Tables for Biological Agricultural and Medical Research, 6th ed., Table IV

- Wilson, E.B. Hilferty, M.M. (1931) The distribution of chi-square. Proceedings of the National Academy of Sciences, Washington, 17, 684–688.

External links

- Earliest Uses of Some of the Words of Mathematics: entry on Chi square has a brief history

- Comparison of noncentral and central distributions Density plot, critical value, cumulative probability, etc., online calculator based on R embedded in Mediawiki.

- Course notes on Chi-Square Goodness of Fit Testing from Yale University Stats 101 class. Example includes hypothesis testing and parameter estimation.

- On-line calculator for the significance of chi-square, in Richard Lowry's statistical website at Vassar College.

- Distribution Calculator Calculates probabilities and critical values for normal, t-, chi2- and F-distribution

- Chi-Square Calculator for critical values of Chi-Square in R. Webster West's applet website at University of South Carolina

- Chi-Square Calculator from GraphPad

- Table of Chi-squared distribution

- Mathematica demonstration showing the chi-squared sampling distribution of various statistics, e.g. Σx², for a normal population

- Simple algorithm for approximating cdf and inverse cdf for the chi-square distribution with a pocket calculator

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

|||||||||||